Now that the heavy lifting is done, I wanted to write down a few tips and points about this setup. Overall I couldn’t be happier with the way it works and how well it handles what I have thrown at it. But as with all IT (and especially IT doing quirky things), there are some things worth knowing before you install it.

Let’s start with what works well.

Recovery

The system is rather resilient. If one of the hosts loses power or has to be shut down, the other host automatically assumes the roles; everything pauses for a few seconds and then resumes. It is amazing to watch the traffic flow from one host to the other while all the machines remain running (exactly what I wanted). When the host comes back, the sync process resumes automatically, and since there is no automatic failback, everything keeps running on the secondary until it in turn needs to be shut down. If you watch /proc/drbd during a host reboot, you can see this kind of progress:

#Host disconnected

=====

Every 2.0s: cat /proc/drbd Mon Jun 9 12:27:19 2014

version: 8.4.3 (api:1/proto:86-101)

srcversion: F97798065516C94BE0F27DC

0: cs:WFConnection ro:Primary/Unknown ds:UpToDate/DUnknown C r-----

ns:17138212 nr:22911428 dw:49038588 dr:27001 al:2921 bm:208 lo:0 pe:0 ua:0 ap:0 ep:1 wo:f oos:7689476

=====

#Host reconnects

=====

Every 2.0s: cat /proc/drbd Mon Jun 9 12:27:42 2014

version: 8.4.3 (api:1/proto:86-101)

srcversion: F97798065516C94BE0F27DC

0: cs:SyncSource ro:Primary/Secondary ds:UpToDate/Inconsistent C r---n-

ns:17145756 nr:22911428 dw:49149816 dr:39973 al:2930 bm:216 lo:0 pe:6 ua:6 ap:4 ep:1 wo:f oos:7772364

[>....................] sync'ed: 0.2% (7588/7592)M finish: 4:19:04 speed: 484 (484) K/sec

=====

Every 2.0s: cat /proc/drbd Mon Jun 9 12:30:34 2014

version: 8.4.3 (api:1/proto:86-101)

srcversion: F97798065516C94BE0F27DC

0: cs:SyncSource ro:Primary/Secondary ds:UpToDate/Inconsistent C r-----

ns:22633676 nr:22911428 dw:49856836 dr:4815453 al:3042 bm:629 lo:1 pe:0 ua:0 ap:1 ep:1 wo:f oos:2918564

[===========>........] sync'ed: 62.6% (2848/7592)M finish: 0:02:17 speed: 21,196 (26,408) K/sec

=====

This usually happens seamlessly in the background.
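If you would rather not eyeball the output, the status lines above can be picked apart with a small script. This is only a minimal sketch, assuming the DRBD 8.4 `/proc/drbd` layout shown in the snapshots; the function name `parse_drbd_status` and the dictionary keys are my own choices for illustration, not anything DRBD provides.

```python
import re

# Matches the per-device line, e.g.
# " 0: cs:SyncSource ro:Primary/Secondary ds:UpToDate/Inconsistent C r---n-"
DEVICE_RE = re.compile(
    r"^\s*(?P<minor>\d+):\s+cs:(?P<cs>\S+)\s+ro:(?P<ro>\S+)\s+ds:(?P<ds>\S+)"
)
# Matches the out-of-sync counter on the statistics line.
OOS_RE = re.compile(r"\boos:(?P<oos>\d+)")


def parse_drbd_status(text):
    """Return one dict per DRBD device found in /proc/drbd-style text."""
    devices = []
    for line in text.splitlines():
        dev = DEVICE_RE.match(line)
        if dev:
            local_role, _, peer_role = dev.group("ro").partition("/")
            local_disk, _, peer_disk = dev.group("ds").partition("/")
            devices.append({
                "minor": int(dev.group("minor")),
                "connection": dev.group("cs"),
                "local_role": local_role,
                "peer_role": peer_role,
                "local_disk": local_disk,
                "peer_disk": peer_disk,
                "oos_kib": None,  # filled in when the statistics line is seen
            })
            continue
        oos = OOS_RE.search(line)
        if oos and devices:
            # The statistics line belongs to the most recent device line.
            devices[-1]["oos_kib"] = int(oos.group("oos"))
    return devices


# Sample text taken from the last snapshot above.
SAMPLE = """version: 8.4.3 (api:1/proto:86-101)
srcversion: F97798065516C94BE0F27DC
 0: cs:SyncSource ro:Primary/Secondary ds:UpToDate/Inconsistent C r-----
    ns:22633676 nr:22911428 dw:49856836 dr:4815453 al:3042 bm:629 lo:1 pe:0 ua:0 ap:1 ep:1 wo:f oos:2918564
"""

for device in parse_drbd_status(SAMPLE):
    print(device)
```

On a live host you could feed it the contents of `/proc/drbd` instead of `SAMPLE`, and alert whenever `connection` is something other than `Connected`.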

Speed

My system really wasn’t designed to be a top-of-the-line system. It is built from decommissioned workstations (i.e. 3 years old) fitted with leftover components (i.e. 6 and 8GB of memory and Green hard drives). The biggest bottleneck in my system is the network. Each server has only a single NIC for everything. This can cause some grief, as sometimes there is just too much traffic that needs to be sent. For example, one of the hosts was once off for some time (it may have been 2 hours) to install some new hardware. Once the host reconnected, a number of things occurred:

- DRS started to move 3 machines back to that host

- The DRBD sync started to bring the vNas back into sync.

Those two things were just too much traffic for the connections to handle. Nothing crashed, but while they were in progress some things slowed to a crawl. Once everything had caught up, it all went back to full speed. This isn’t a huge deal unless you have something mission critical running on it.

This could be fairly easily mitigated in a number of ways, including:

- Installing additional NICs into the host. (This is something I plan to do)

- Splitting the traffic into different types and throttling it, e.g.

  - Creating a second NIC for the vNas machines and assigning the sync to run across this link. This connection could then be throttled via the virtual switch.

  - Using the DRBD sync rate setting to reduce the maximum sync speed.

  - Splitting vMotion traffic onto its own switch and throttling it.

Given that this has only occurred maybe twice, and taking my hardware setup into consideration, this isn’t an issue.
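To get a feel for what capping the DRBD sync speed would mean in practice, here is a rough back-of-the-envelope sketch. The 30% fraction is an assumption (a commonly quoted rule of thumb for leaving room for other traffic, not something I have tuned on this system), and both function names are made up for the example.

```python
def sync_rate_cap_kib(link_mbit_per_s, fraction=0.3):
    """Suggested resync cap in KiB/s for a link of the given speed.

    `fraction` is the assumed share of the link handed to resync traffic.
    """
    link_kib_per_s = link_mbit_per_s * 1_000_000 / 8 / 1024
    return int(link_kib_per_s * fraction)


def resync_seconds(out_of_sync_kib, rate_kib_per_s):
    """Estimated time to resync the given amount of out-of-sync data."""
    return out_of_sync_kib / rate_kib_per_s


# A single Gigabit NIC shared by everything, as in my hosts.
cap = sync_rate_cap_kib(1000)
print("suggested cap:", cap, "KiB/s")

# Figures from the last /proc/drbd snapshot: oos:2918564 at 21,196 K/sec.
print("uncapped:", round(resync_seconds(2918564, 21196)), "seconds")
print("capped:  ", round(resync_seconds(2918564, cap)), "seconds")
```

The uncapped figure comes out at a little over two minutes, which lines up with the `finish: 0:02:17` DRBD reported. A lower cap makes the resync take longer, but it leaves the rest of the link free for vMotion and the running machines.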

Virtual Hard drive speed.

The total available speed for virtual hard drives seems to be quite sufficient given the hardware used in my setup. I am sure this would increase with faster hard drives and larger network pipes between hosts.

The sync between the two vNas machines runs at full Gigabit speed. I also believe that by changing the sync protocol you may be able to increase the disk speed, at the risk of potentially losing data in a power event (i.e. Protocol A would be the quickest, but it would also carry the greatest risk).

My media machine was not able to send out multiple HD streams while running on the vNas. One stream was no problem. This wasn’t such a huge deal, as it had hardware that was dependent upon a single host, so I moved it to local storage on that box. Again, I believe this to be an issue with the underlying hardware rather than the setup.

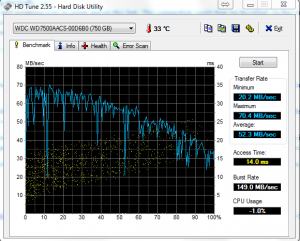

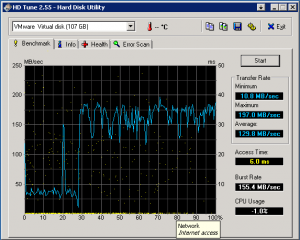

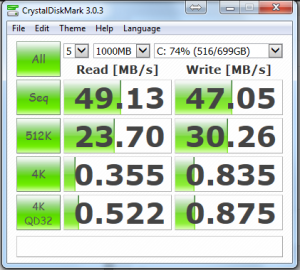

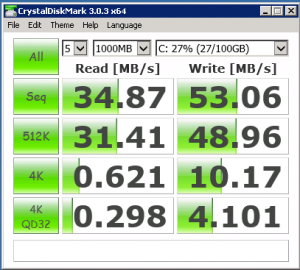

I did some benchmarking on a Windows box running on the vNas. I also did the same test on my local machine (which is no beast) for comparison.

HD Tune

| My PC | Virtual PC |

So the vNas starts out slow, then at about 30 seconds it seems to get into gear and jumps to about 180MB/sec. In the end it averages higher than my local PC. That is not a result I expected.

Crystal Disk Mark

| My PC | Virtual PC |


So the local drive was quicker on reads, but when it came to writes the vNas disk takes the prize.

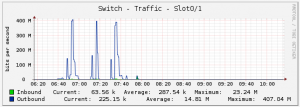

You can also see the network running at full speed while the write tests are in progress.

These results genuinely shocked me. The reads are pretty close in speed in Crystal Disk Mark and significantly higher (after 30 seconds) in HD Tune. In terms of writes, I was sure that the vNas would be half the speed, but it actually outperformed the local PC. I guess this could be put down to the underlying hardware.

I will do a part 4 with some more settings that helped keep the system running smoothly.