In the final part to this series of posts, I would like to outline a few of the issues that I encountered when setting up DRBD.

Split Brain

With a two node cluster, this is probably the biggest issue that arises. Split brain is when the communication between the hosts is lost and both elevate themselves to primary. This causes the data on both to be different and so the system doesn’t know which data is legit.

The process of recovery from split brain is fairly straight forward. You disconnect the node that is not the primary, set it to discard its data and then reconnect it to the cluster. (More info from http://www.drbd.org/users-guide/s-resolve-split-brain.html)

I did a few tests by turning off the networking gear between the two hosts to simulate a failure and was able to recover fairly quickly. (The main issue i believe with this was that I knew which was master before hand). In a true failure I would probably most likely suggest the node that was on the vHost box that held the HA Master role as the machines should restart on that box.

This could also be resolved with a few simple solutions such as

- Setting up a three node cluster. – You could use quorum to determine who is active. (i.e. more then 50% of the nodes need to be able to communicate to keep the cluster up)

- Redundant switches. – This would cover for switch failure.

Machine Resource Allocation

At first I found that the entire system came to a standstill on a regular basis. After looking at the whole system I came to the conclusion that because disk I/O was happening twice (once to the machine VMDK and once for the host storage). I needed to prioritize the vNas machines to ensure that they had the ability to read and write with a higher priority then the machines that were writing to them. After adjusting the resource level for CPU, Memory and disk to high rather then normal things started to smooth out.

vNas Host Memory

The machines originally started with 512 MB memory. This was increased to 1GB after I saw the machines hit 512MB during one of their syncs (or under heavy load). Under normal load the machines sit at a very low memory usage.

30MB of memory. WHAT?!?

I think that in a perfect world you could reduce the memory of these machines to 512 or even 256 given my work load. The issue with that is that when it needs more is usually when it needs to be done fast. Given that the actual consumption of memory sits around 30MB for now I will leave it at 1GB and allow the hyper visor to do its job of memory management.

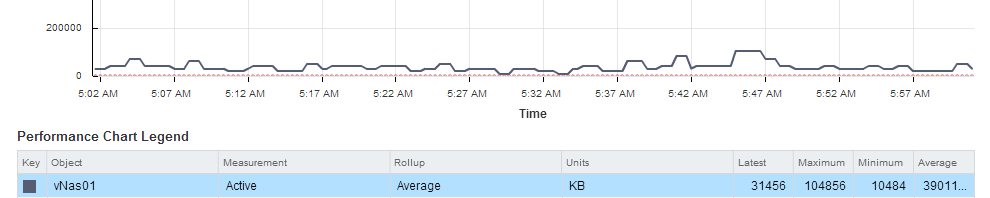

Network Traffic

Ideally you would want to split the DRBD sync traffic and the NFS traffic onto their own networks that could then be controlled by throttling or Network I/O control. This is probably the biggest issue I run into for this reason. If you slow down the sync between these two machines, the entire network slows down to keep pace. Given that I only have 1 NIC in each host there is little that can be done to fix this issue. In a production environment multiple up links would go a long way to help resolve this issue.

HeartBeat Knowledge

When I started this I was expecting to run a Primary/Primary cluster using CARP. That didn’t happen given that the NFS locks needed to be transferred between hosts. (Although this may have been able to be done with the metadata changes). A more in depth knowledge of Heartbeat and in particular the ha.cf file would be advantageous. In particular using the group ping ability to shutdown the services in the event of host isolation. (This would help resolve the split brain issues by stopping an isolated host rather then letting it elevate itself to primary)

Overall

Overall this has been a great project to work on. It has been very interesting and I am surprised by what I was able to achieve using free products. The question always comes down to could this be used in a production environment?

Of course further testing and consideration of the environment always needs to be considered but I think that it could. If this was to be used there would have to be a few things considered such as running the three node cluster to minimize data loss. The other thing would be that you would need system administrators who knew DRBD, linux etc. This one kind of goes without saying but if you wanted to run an entire private cloud from DRBD the administrators would need to know and understand why it does what it does. I am still learning this but so far it has been reliable and resilient.